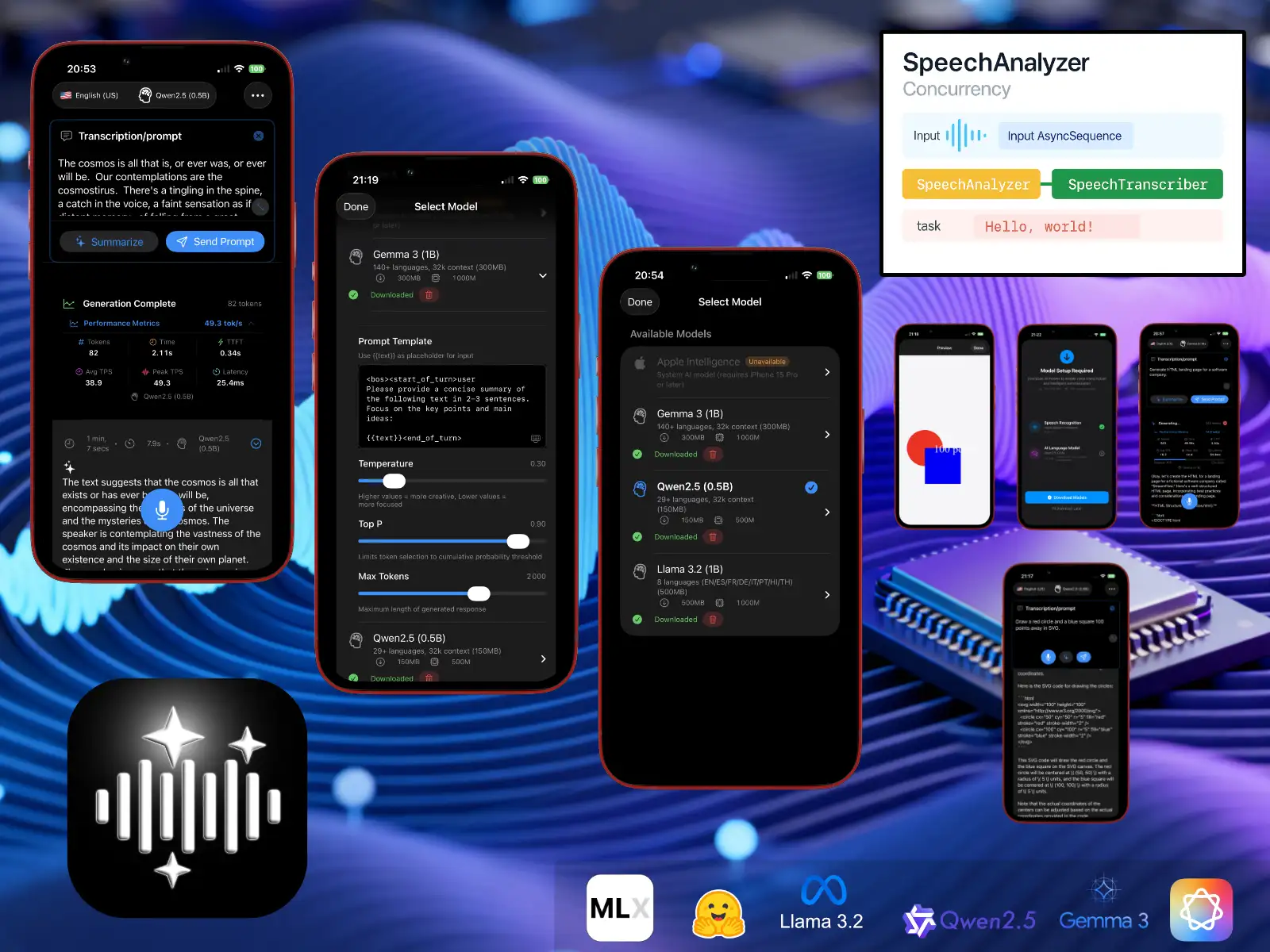

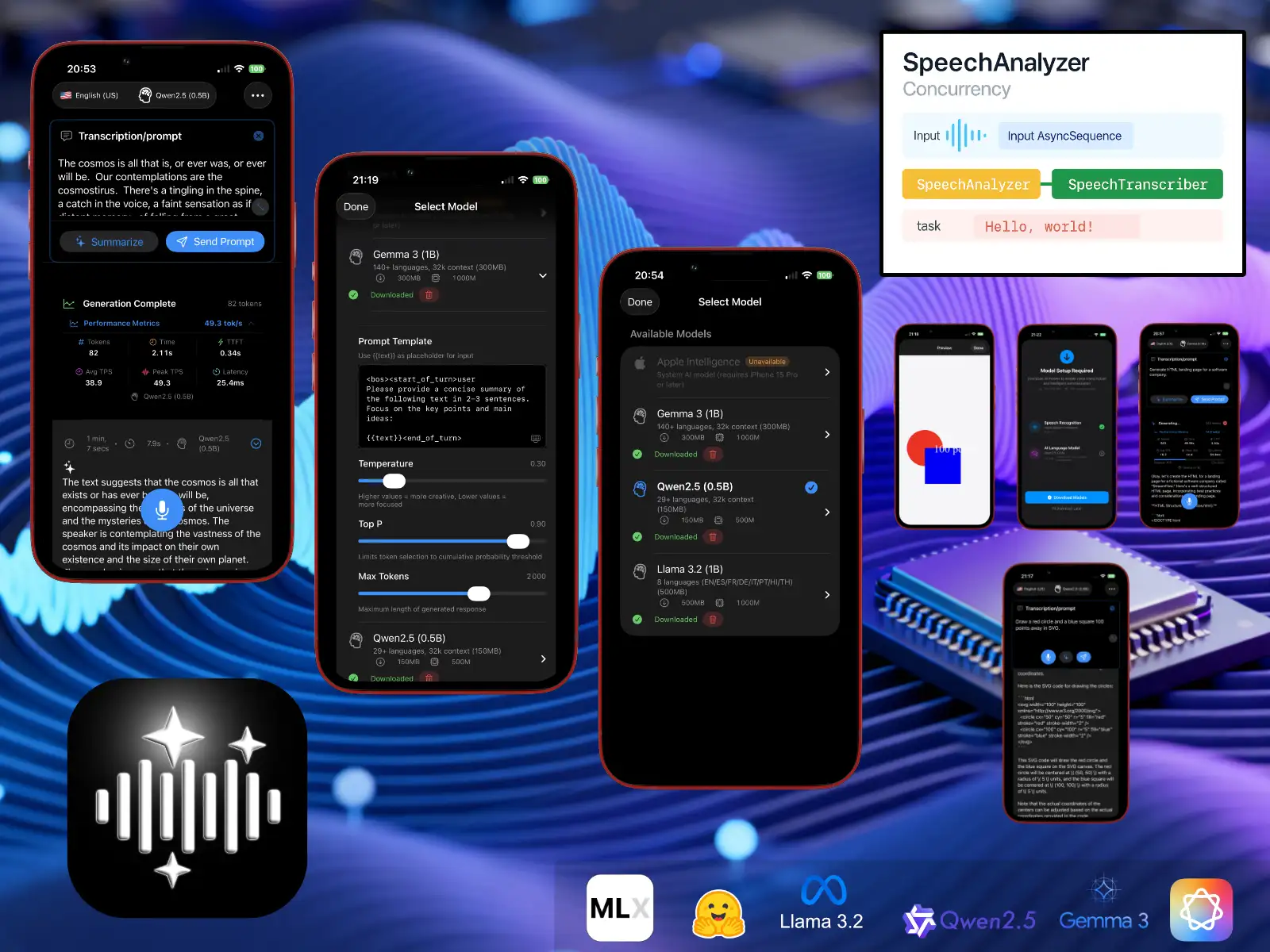

LLMVoice 1.0

LLMVoice is a technical demo for evaluating the new iOS 26 speech recognition APIs (SpeechAnalyzer / SpeechTranscriber) and for running 4-bit quantized open-weight LLMs locally, through the MLX framework, on an iPhone that supports it. You can either summarize a transcription or send a prompt directly to the model.

Let’s be honest: models this compressed aren’t particularly useful, but it’s still fun to watch them hallucinate. That said, they’re demanding enough to bring my old iPhone 13 to its knees!

The following models are available for download:

Gemma3_1b (mlx-community/gemma-3-1b-it-4bit)

Qwen25_05b (lmstudio-community/Qwen2.5-0.5B-Instruct-MLX-4bit)

Llama32_1b (mlx-community/Llama-3.2-1B-Instruct-4bit)
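For readers who want to reference these models in their own code, here is a minimal sketch of how the list above could be represented as a Swift enum. The type name `LocalModel` and the property `repositoryID` are hypothetical and are not taken from the LLMVoice source; only the labels and Hugging Face repository IDs come from the list.

```swift
// Hypothetical catalog type: the raw values mirror the labels listed above.
enum LocalModel: String, CaseIterable {
    case gemma3_1b = "Gemma3_1b"
    case qwen25_05b = "Qwen25_05b"
    case llama32_1b = "Llama32_1b"

    /// Hugging Face repository ID, copied verbatim from the list above.
    var repositoryID: String {
        switch self {
        case .gemma3_1b:
            return "mlx-community/gemma-3-1b-it-4bit"
        case .qwen25_05b:
            return "lmstudio-community/Qwen2.5-0.5B-Instruct-MLX-4bit"
        case .llama32_1b:
            return "mlx-community/Llama-3.2-1B-Instruct-4bit"
        }
    }
}

// Print each label next to the repository it would be downloaded from.
for model in LocalModel.allCases {
    print("\(model.rawValue): \(model.repositoryID)")
}
```

Keeping the label and the repository ID in one type means a model picker and a downloader can share a single source of truth.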

Source Code

LLMVoice source code

System Requirements

iOS 26.0+ / iPadOS 26.0+

For Apple Intelligence

iPhone 15 Pro or later

Apple Intelligence enabled in Settings

For local MLX models

iPhone 12 or later (A14 Bionic chip or higher).

iPad Pro 2021 or later (M1 chip or higher).

iPad Air 5th generation or later (M1 chip or higher).

Metal GPU with specific features: air.simd_sum, rmsfloat16 kernel support.

Storage

App size: about 50 MB

Model downloads (MLX only):

Qwen2.5 (0.5B): about 150 MB

Gemma 3 (1B): about 300 MB

Llama 3.2 (1B): about 500 MB
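To get a rough idea of the space needed if you download everything, the figures above can be summed. This is a small sketch using only the approximate sizes listed here; the variable names are illustrative, and actual on-disk sizes may differ.

```swift
// Approximate sizes in MB, taken from the figures listed above.
let appSizeMB = 50
let modelSizesMB = [
    "Qwen2.5 (0.5B)": 150,
    "Gemma 3 (1B)": 300,
    "Llama 3.2 (1B)": 500,
]

// Sum of all three model downloads: 150 + 300 + 500 = 950 MB.
let allModelsMB = modelSizesMB.values.reduce(0, +)

// App plus every model: 50 + 950 = 1000 MB, roughly 1 GB.
let totalMB = appSizeMB + allModelsMB

print("All models: about \(allModelsMB) MB")
print("App + all models: about \(totalMB) MB")
```

In other words, installing the app with all three models takes about 1 GB of free storage.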

🛟 Support

Need help or want to share feedback? Email support@andrefrelicot.dev.